
I am a Senior AI Researcher at Samsung Research America, where I work on multimodal understanding, editing, and generation for edge devices, with an emphasis on optimizing performance in resource-constrained environments. I earned my PhD in Computer Science from Johns Hopkins University under the supervision of Bloomberg Distinguished Professor Rama Chellappa. Prior to that, I completed my Master’s degree at the University of Maryland, College Park. Throughout my research, I have collaborated closely with Tom Goldstein, Micah Goldblum, and Soheil Feizi at the University of Maryland, as well as Andrew Gordon Wilson and Yann LeCun from NYU and Meta.


My primary research focuses on multimodal and generative AI. Previously, I worked on adversarial robustness, diffusion models, GANs, vision-language models, object detection and segmentation, and data poisoning and backdoor attacks.


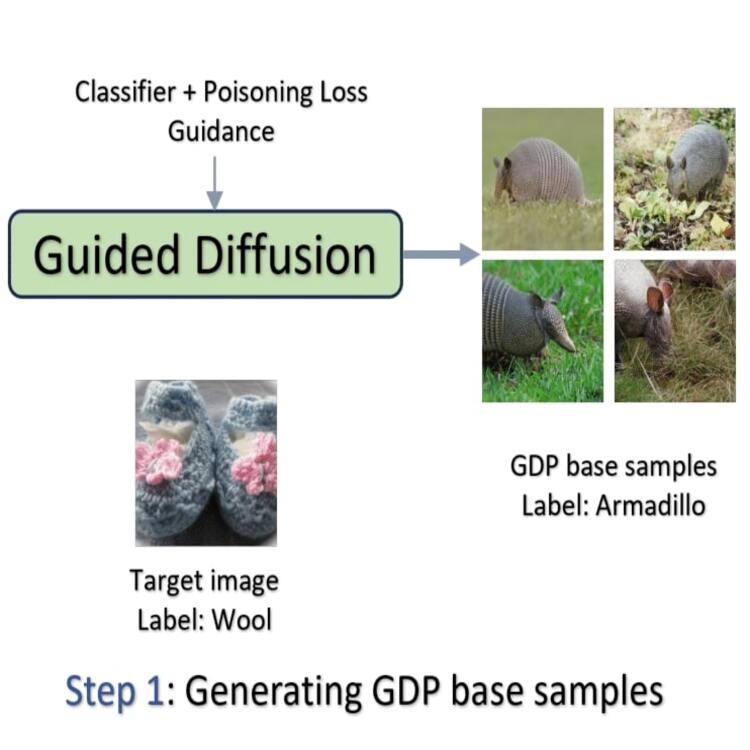

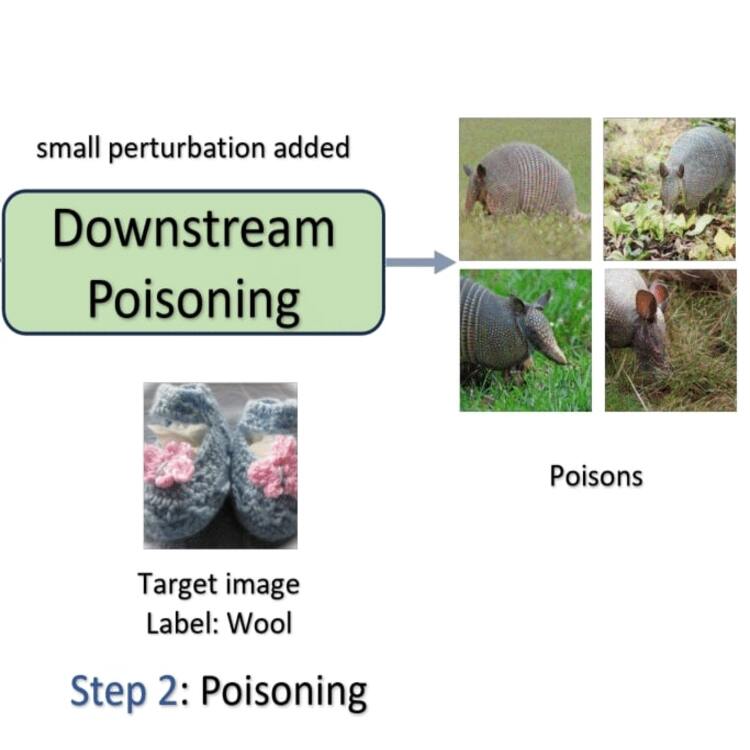

Hossein Souri, Arpit Bansal, Hamid Kazemi, Liam Fowl, Aniruddha Saha, Jonas Geiping, Andrew Gordon Wilson, Rama Chellappa, Tom Goldstein, Micah Goldblum

ICML Workshop on the Next Generation of AI Safety, 2024 (Oral Presentation)

PDF / arXiv / code

In this work, we use guided diffusion to synthesize base samples from scratch that lead to significantly more potent poisons and backdoors than previous state-of-the-art attacks. Our Guided Diffusion Poisoning (GDP) base samples can be combined with any downstream poisoning or backdoor attack to boost its effectiveness.


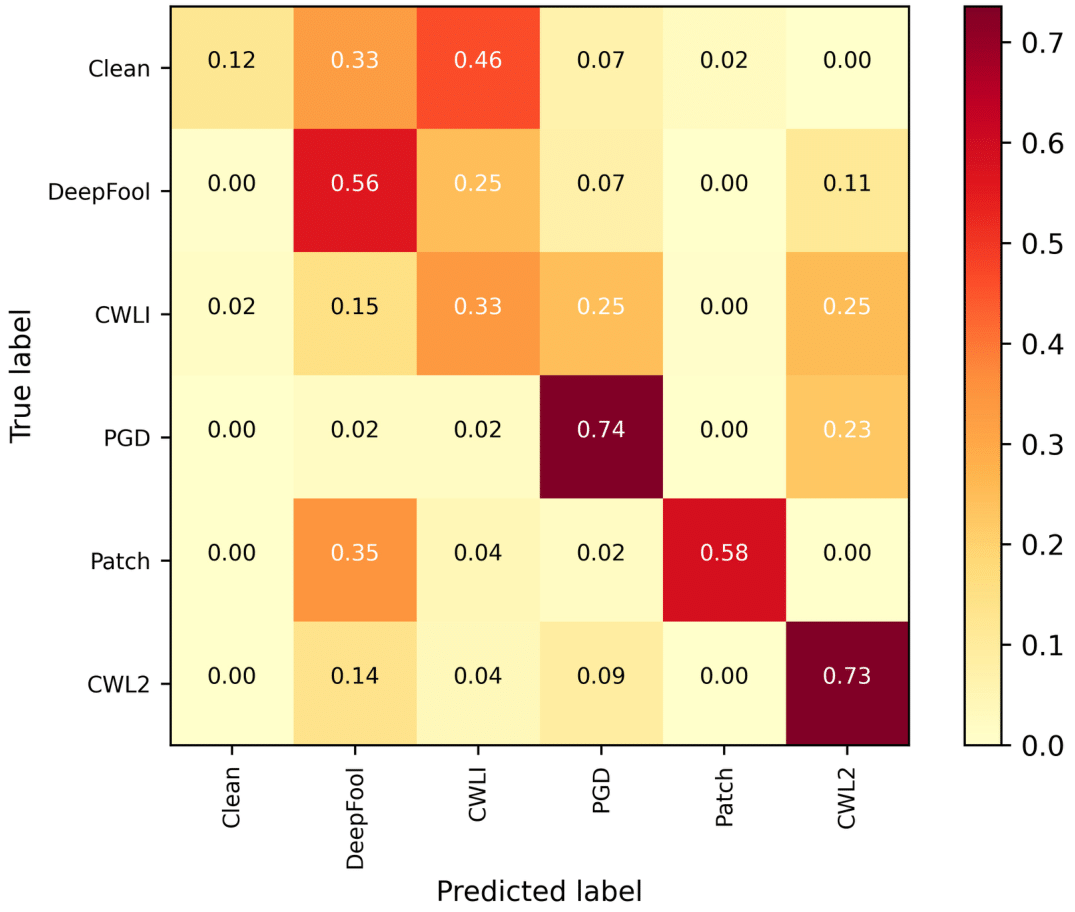

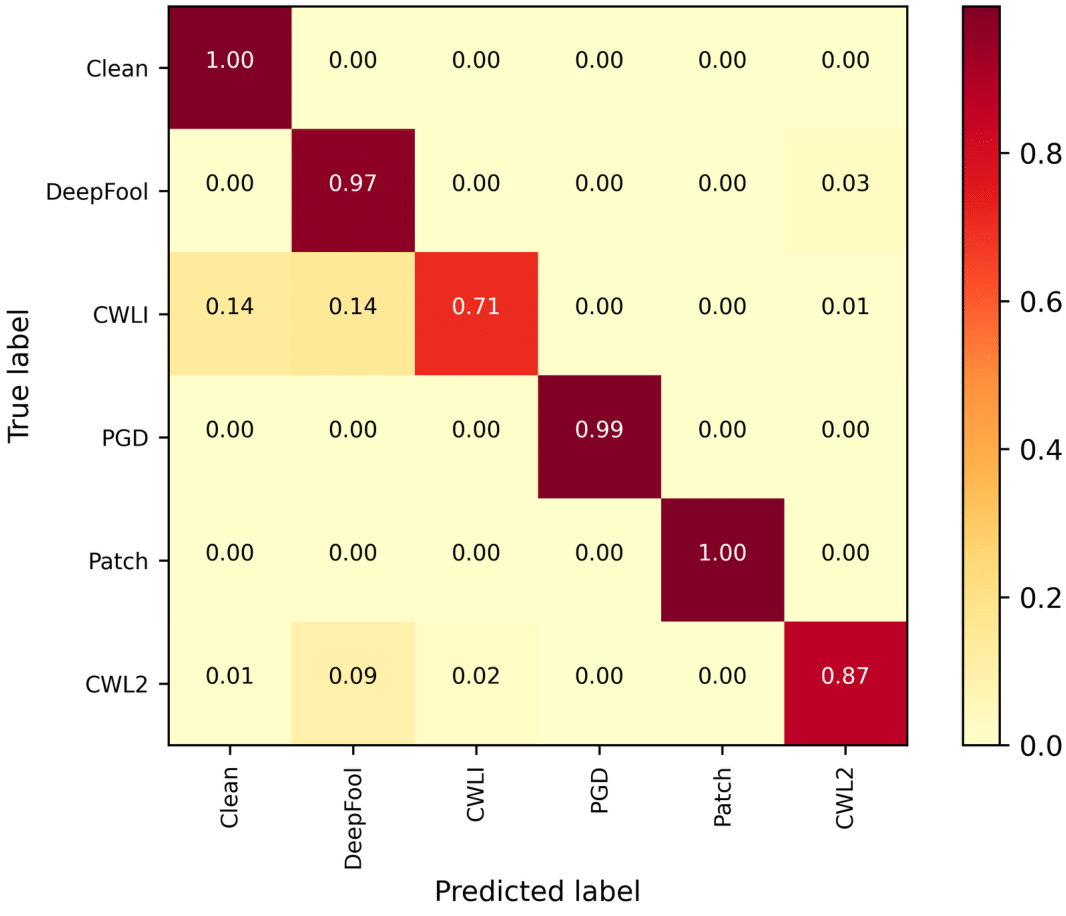

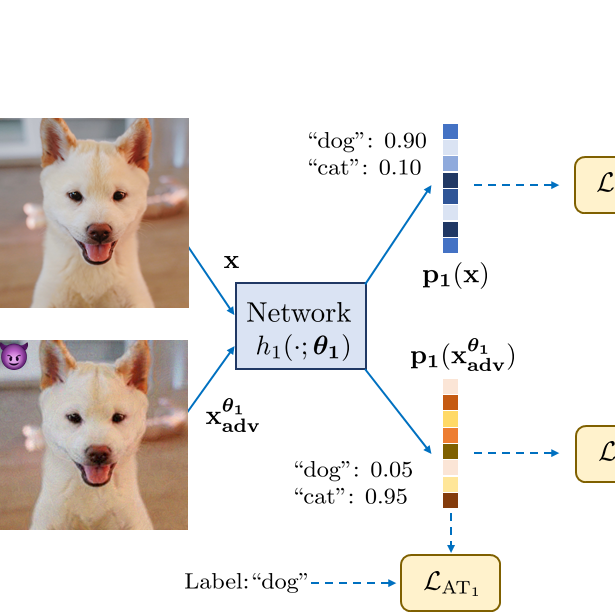

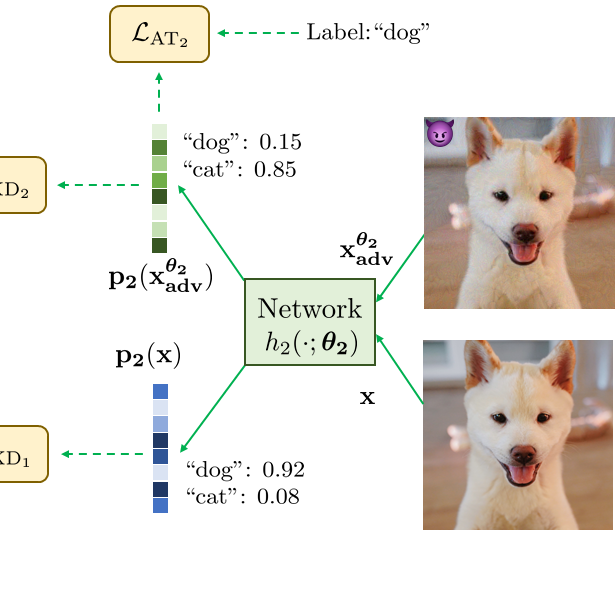

Hossein Souri, Pirazh Khorramshahi, Chun Pong Lau, Micah Goldblum, Rama Chellappa

IEEE ICASSP, 2024

PDF / arXiv

The adversarial attack literature contains a myriad of algorithms for crafting perturbations that yield pathological behavior in neural networks. In many cases, multiple algorithms target the same tasks and even enforce the same constraints. In this work, we show that different attack algorithms produce adversarial examples that are distinct not only in their effectiveness but also in how they qualitatively affect their victims.


Micah Goldblum*, Hossein Souri*, Renkun Ni, Manli Shu, Viraj Prabhu, Gowthami Somepalli, Prithvijit Chattopadhyay, Mark Ibrahim, Adrien Bardes, Judy Hoffman, Rama Chellappa, Andrew Gordon Wilson, Tom Goldstein

NeurIPS, 2023

PDF / arXiv / code

Battle of the Backbones (BoB) is a large-scale comparison of pretrained vision backbones, spanning self-supervised learning (SSL) models, vision-language models, and CNNs versus ViTs, across diverse downstream tasks: classification, object detection, segmentation, out-of-distribution (OOD) generalization, and image retrieval.


Chun Pong Lau, Jiang Liu, Hossein Souri, Wei-An Lin, Soheil Feizi, Rama Chellappa

TPAMI

PDF / arXiv

In this paper, we propose a novel threat model called the Joint Space Threat Model (JSTM), which can serve as a special case of the neural perceptual threat model that does not require additional relaxation to craft the corresponding adversarial attacks. We also propose Interpolated Joint Space Adversarial Training (IJSAT), which applies a Robust Mixup strategy and trains the model with JSA samples.


Valeriia Cherepanova, Steven Reich, Samuel Dooley, Hossein Souri, Micah Goldblum, Tom Goldstein

AIES, 2023

PDF / arXiv

In this paper, we explore the effects of each kind of imbalance possible in face identification and discuss other factors that may impact bias in this setting.


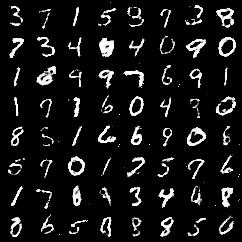

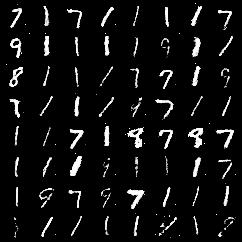

Hossein Souri, Liam Fowl, Rama Chellappa, Micah Goldblum, Tom Goldstein

NeurIPS, 2022

PDF / arXiv / code

Typical backdoor attacks insert the trigger directly into the training data, although the presence of such an attack may be visible upon inspection. We develop a new hidden trigger attack, Sleeper Agent, which employs gradient matching, data selection, and target model re-training during the crafting process. Sleeper Agent is the first hidden trigger backdoor attack to be effective against neural networks trained from scratch. We demonstrate its effectiveness on ImageNet and in black-box settings.


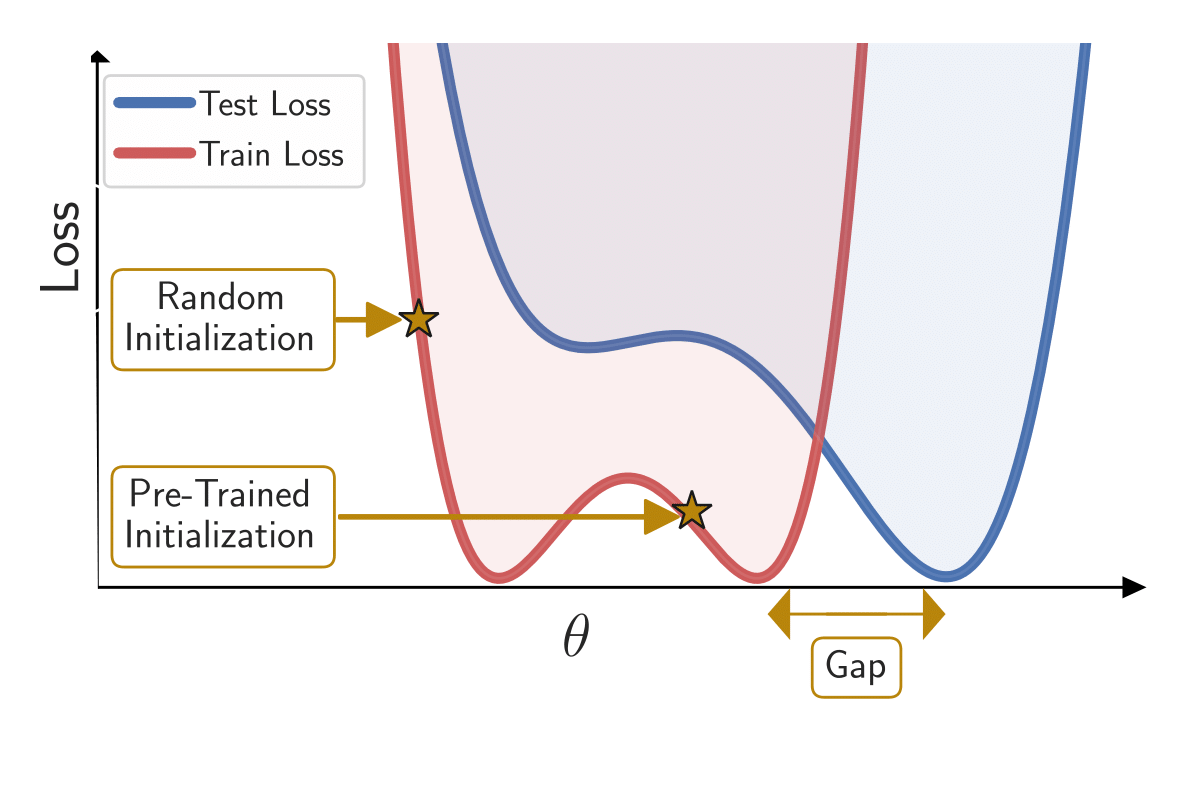

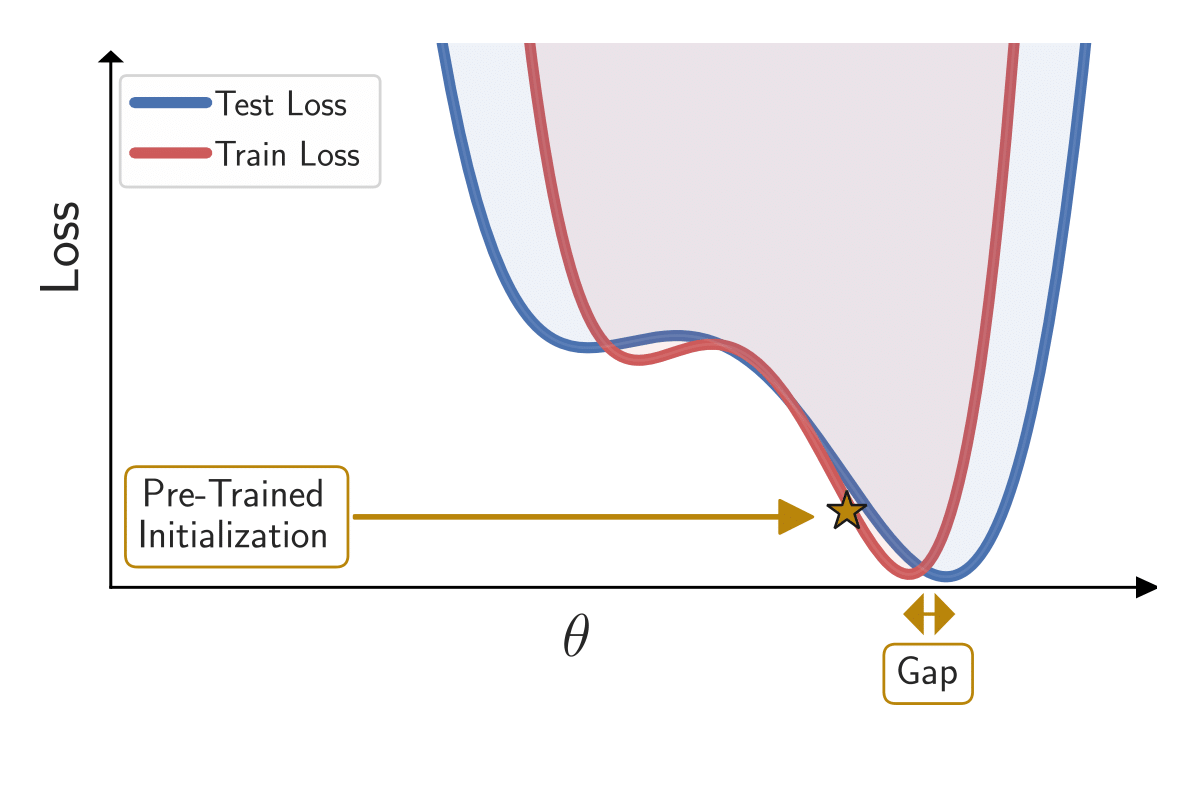

Ravid Shwartz-Ziv, Micah Goldblum, Hossein Souri, Sanyam Kapoor, Chen Zhu, Yann LeCun, Andrew Gordon Wilson

NeurIPS, 2022

PDF / arXiv / code

Our Bayesian transfer learning framework transfers knowledge from pre-training to downstream tasks. To up-weight parameter settings consistent with a pre-training loss function, we fit a probability distribution over the parameters of feature extractors to a pre-training loss function and rescale it as a prior.


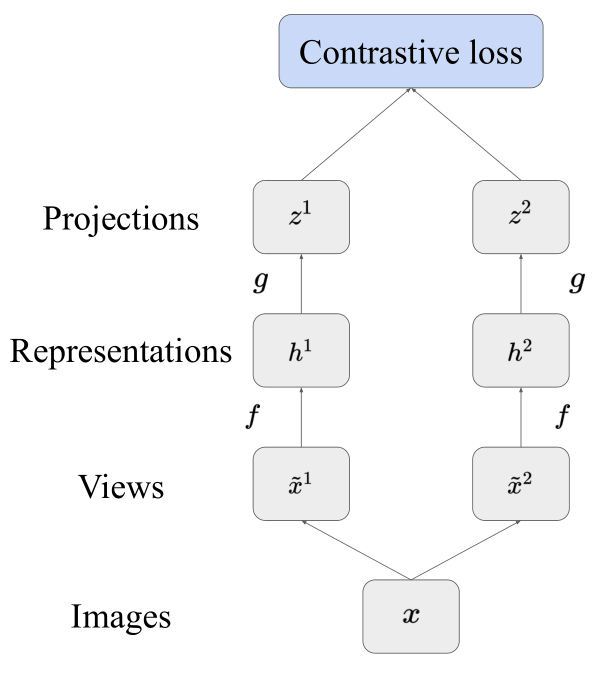

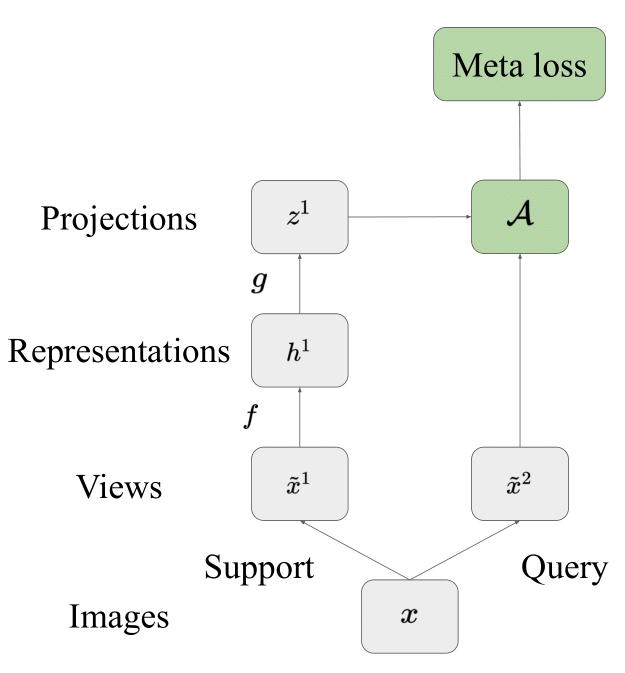

Renkun Ni, Manli Shu, Hossein Souri, Micah Goldblum, Tom Goldstein

ICLR, 2022

PDF / arXiv / code

In this paper, we discuss the close relationship between contrastive learning and meta-learning under a certain task distribution. We complement this observation by showing that established meta-learning methods, such as Prototypical Networks, achieve comparable performance to SimCLR when paired with this task distribution.


Jiang Liu, Chun Pong Lau, Hossein Souri, Soheil Feizi, Rama Chellappa

IEEE TIFS, 2022

IEEE / PDF / arXiv

In this paper, we propose mutual adversarial training (MAT), in which multiple models are trained together and share the knowledge of adversarial examples to achieve improved robustness. MAT allows robust models to explore a larger space of adversarial samples and find more robust feature spaces and decision boundaries.


Pirazh Khorramshahi*, Hossein Souri*, Rama Chellappa, Soheil Feizi

arXiv, 2020

PDF / arXiv

GANs often suffer from mode collapse, where the generator fails to capture all existing modes of the input distribution. To tackle this issue, we take an information-theoretic approach and maximize a variational lower bound on the entropy of the generated samples to increase their diversity. We call this approach GANs with Variational Entropy Regularizers (GAN+VER).


Prithviraj Dhar, Joshua Gleason, Hossein Souri, Carlos D. Castillo, Rama Chellappa

arXiv, 2020

PDF / arXiv / Updated Version

We propose a novel approach called "Adversarial Gender De-biasing (AGD)" that helps mitigate gender bias in face recognition by reducing the strength of gender information in face recognition features.


Chun Pong Lau, Hossein Souri, Rama Chellappa

FG, 2019 (Oral Presentation)

IEEE / PDF / arXiv

In this work, we propose a generative single-frame restoration algorithm that disentangles the blur and deformation due to turbulence and reconstructs a restored image.


Last update: September 2024